Introduction

Communication barriers due to the complexities of English are a real challenge. English, with its nuances, makes global understanding difficult. Here's a starting point for a solution: a simple language translation app with Flask and Tesseract OCR. It's a user-friendly way to begin addressing the challenges posed by English's intricacies in image translation. Say goodbye to language barriers and welcome the start of a journey to effortlessly translate visual content into a universally understood language. Welcome to a simplified language translation beginning.

Understanding the tools:

I have leveraged the familiar tools Flask and Deep-translate in the development of my latest project, building upon the foundation laid in my previous exercise creating a translation API. Feel free to explore the progress at @python-api-for-seamless-translation-using-deep-translator-and-flask.

OpenCV-python

OpenCV-Python is a powerful open-source library for computer vision tasks. With a range of functions, it simplifies image and video processing for applications like machine learning. Its compatibility with Python makes it a versatile choice for tasks like object detection and feature extraction, contributing to the efficiency of computer vision projects.

Tesseract OCR

Tesseract OCR is a state-of-the-art optical character recognition engine developed by Google. Renowned for its accuracy, Tesseract excels in extracting text from images, making it a vital tool for various applications. Its versatility spans multiple languages, making it a go-to choice for recognizing diverse text content. Integrating seamlessly into projects, Tesseract OCR plays a crucial role in transforming visual information into editable and machine-readable text, enhancing the efficiency of applications in fields like document analysis, text extraction, and automated data processing.

pytesseract

Pytesseract is a Python wrapper for Tesseract OCR, providing a convenient interface for utilizing Tesseract's capabilities in Python applications. This library simplifies the integration of Tesseract into Python scripts, making it easier for developers to perform optical character recognition on images. With pytesseract, tasks like extracting text from images become more accessible, allowing for seamless integration into various projects, from automating data entry to enhancing text-based applications.

Let's start our Journey

Step 1: Installing Tesseract

You can install Tesseract from here or you can also search in Google how to install your desired version of Tesseract

Step 2: Installing packages

pip install flask

pip install opencv-python

pip install pytesseract

pip install deep-translator

Step 3: Create a template

Create a folder name templates in the project directory and create a new file named translator.html which will contain the form to upload images and select the desired language in which to translate. ( please remember to put the html file inside the templates folder or it can't and won't be rendered )

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8" />

<meta name="viewport" content="width=device-width, initial-scale=1.0" />

<title>Document</title>

</head>

<body>

<div>

<form action="/main" method="post" enctype="multipart/form-data">

<input name="file" type="file" />

<select name="language" >

<option></option>

</select>

<button type="submit">Submit</button>

</form>

</div>

</body>

</html>

we would need the available languages and how to show the values of the languages which I'll explain in the upcoming sections

Step 4: Create a main file

Create a new file named main.py in your project directory. This will be the main Python file for your Flask application.

Import necessary libraries

from flask import Flask, request, redirect, jsonify , render_template from deep_translator import GoogleTranslator import json from pytesseract import * import cv2Import the supported languages Json

Create a data folder in the project directory and put the languages.json inside, use json.load to load the file inside the app

languages = json.load(open("./data/languages.json","r"))Create an App and define the path for Tesseract.exe

app = Flask(__name__) pytesseract.tesseract_cmd = 'path_to_tesseract.exe'Create a route for get and post

The get method will render the translator.html which contains the form for image upload. We can pass the language data to the form options

@app.route('/main', methods=["GET","POST"]) def translate(): if request.method == "GET": return render_template("page.html" , data=languages)Pass the data in the option in translator.html

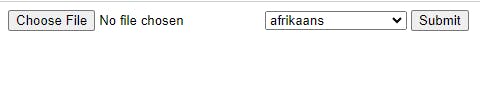

<select name="language" > {% for key in data %} <option value="{{data[key]}}">{{ key }}</option> {% endfor %} </select>The form will look like this

The post method

1. Get the file and language to translate

if request.method == "POST" :

file = request.files['file']

language = request.form.get("language")

2. Read the image and convert the bgr version of the image in opencv to rgb version which is common in many applications

images = cv2.imread(file.filename)

rgb = cv2.cvtColor(images, cv2.COLOR_BGR2RGB)

3. Conversion of image to data

results = pytesseract.image_to_data(rgb, output_type=Output.DICT)

pytesseract.image_to_data: This function is part of the pytesseract library, which is a Python wrapper for the Tesseract OCR engine. The image_to_data function is specifically designed for extracting structured information about text in an image.

rgb is the input image in RGB format which is attained by the OpenCV library

output_type= Output.DICT : This parameter specifies the output format for the results. By setting it to Output.DICT, the function returns a dictionary containing various information about the identified text regions, such as the text content, coordinates, and other details.

4. Loop through the results["text"] and create a sentence

all_text = []

for i in range(0, len(results["text"])):

text = results["text"][i]

text = "".join(text).strip()

all_text.append(text)

line = " ".join(all_text)

5. Pass the line to the googletranslator from deep-translator and return the result

translated = GoogleTranslator(source='auto', target=language).translate(line)

return translated

The overall code will look like this.

from flask import Flask, request, redirect, jsonify , render_template

from deep_translator import GoogleTranslator

import json

from pytesseract import *

import cv2

app = Flask(__name__)

languages = json.load(open("./data/languages.json","r"))

pytesseract.tesseract_cmd = 'C:\\Users\\Kesavan\\AppData\\Local\\Programs\\Tesseract-OCR\\tesseract.exe'

@app.route('/main', methods=["GET","POST"])

def translate():

if request.method == "GET":

return render_template("page.html" , data=languages)

if request.method == "POST" :

file = request.files['file']

language = request.form.get("language")

images = cv2.imread(file.filename)

rgb = cv2.cvtColor(images, cv2.COLOR_BGR2RGB)

results = pytesseract.image_to_data(rgb, output_type=Output.DICT)

all_text = []

for i in range(0, len(results["text"])):

text = results["text"][i]

text = "".join(text).strip()

all_text.append(text)

line = " ".join(all_text)

translated = GoogleTranslator(source='auto', target=language).translate(line)

return translated

if __name__ == '__main__':

app.run(debug=True)

Result:

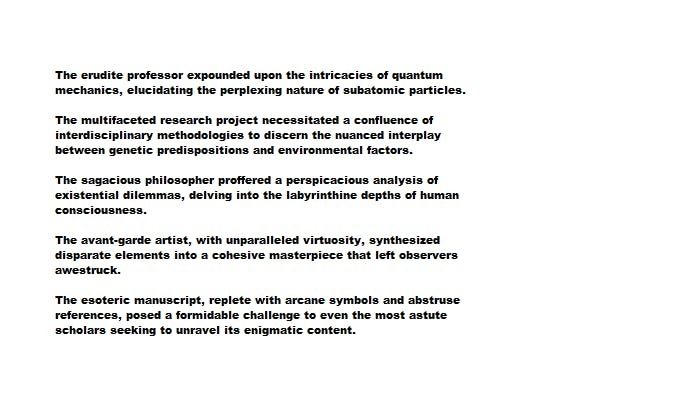

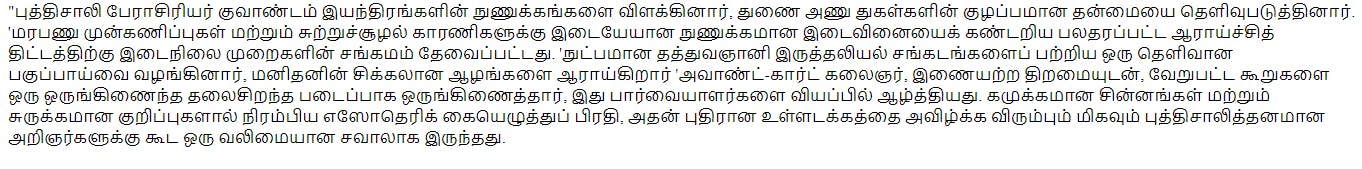

The below image is a set of English sentences that consists of many complex words which are translated into Tamil.

Conclusion

To sum up, our journey has been about building a simple language translation tool using Flask, Tesseract OCR, and Deep-translate. We learned how OpenCV-Python helps with images, and Pytesseract makes text extraction easy. The provided code guides you through creating your translation app, opening doors for more features. This basic setup is ready for you to explore and improve, making global communication easier.